XR Hack: My Experience at the Mixed Reality Hackathon

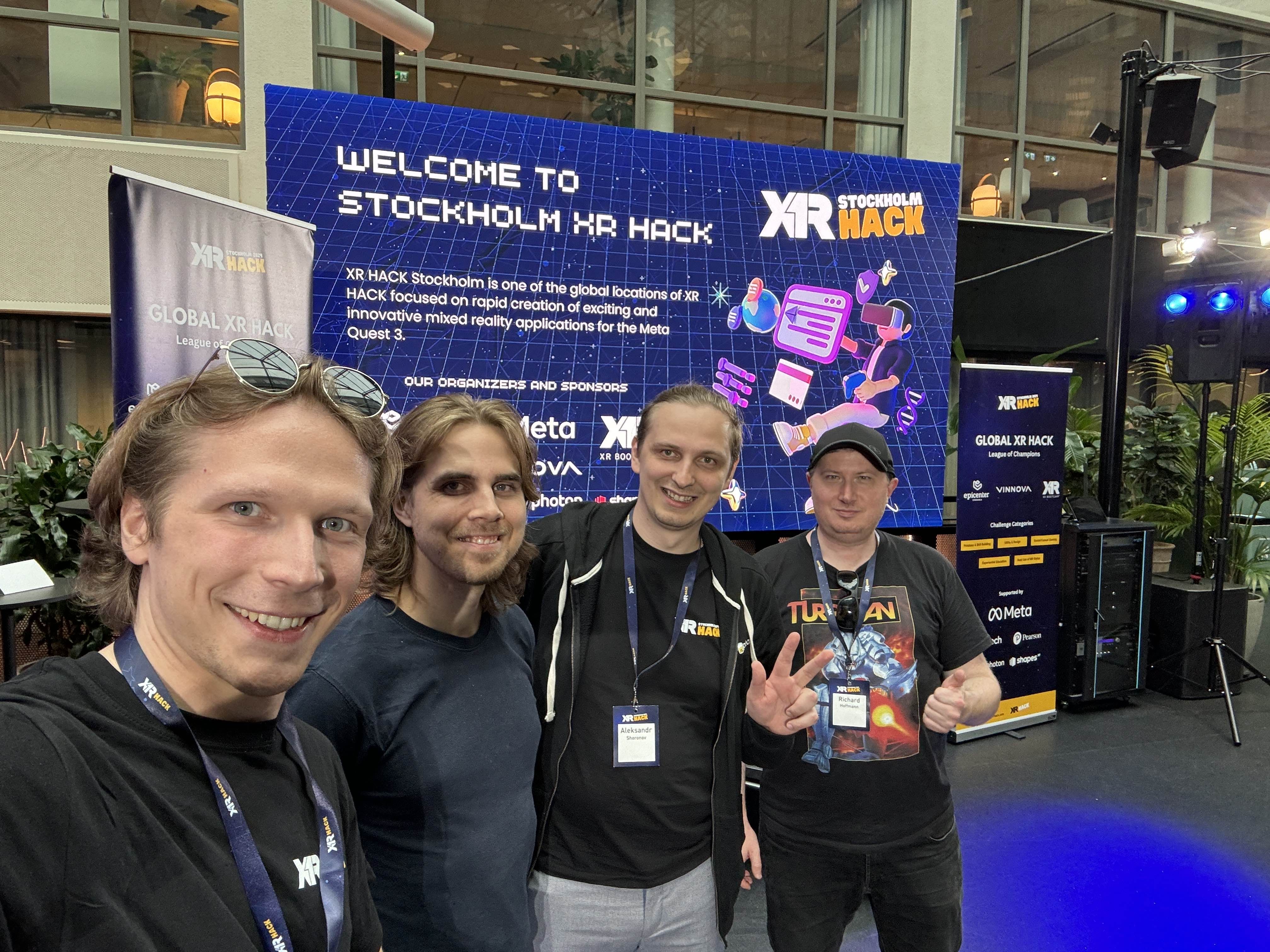

One month ago, I spent three days at XRHack in Stockholm, a hackathon dedicated to developing games and applications for mixed reality. The hackathon was organized in partnership with Meta, so you can guess that the main hardware and technology stack of the event were Quest and the Mixed Reality SDK, respectively.

I bought my headset this spring and became curious about developing applications for it. I tried to build a few small projects, but I didn't have enough time to make anything serious.

When I decided to go to this hackathon, I thought I would be a solo developer working on my own project, but the reality was different: when I arrived at the venue, I learned that solo projects were not allowed, so I had to find a team. That scared me because I'm not an experienced Unity and C# developer, and that is practically the only stack in mainstream use for this kind of development nowadays. Yes, there is still WebXR, but I considered it not mature enough for quick prototyping, which is important for hackathons.

During a team-building session organized by the facilitators, all participants without a team were given markers and asked to jot down their ideas on whiteboards. I was prepared to grab a marker, but to be honest, I wasn’t ready to pitch my project. I wrote down “AR Origami training with game mechanics” and started a conversation with another participant. Suddenly, my attention was drawn to the smiling face of the person who had written “Airport tower simulator” nearby on the same whiteboard. I was a huge fan of the airport simulator when it was a popular mobile game, and I was thrilled to find someone who shared my interest in porting it to XR. The game’s concept is simple: you’re an airport dispatcher tasked with landing all incoming airplanes by drawing their landing tracks on the screen with your finger. I found the courage to ask the guy if I could join his team, and he agreed. I warned him that I wasn’t an experienced developer, but he was okay with that and said I’d have a lot of fun and gain valuable experience. And he was right.

Our team of five consisted of four developers, including me, and one sound designer. We started by discussing high-level details and, more importantly, the name, which was the most difficult part of the hackathon. We decided to call our project "Crazy planes" and got to work.

That evening, I got frustrated trying to connect my headset to my laptop for debugging. I was already under stress because I felt responsible for quickly learning and building something useful for the project, to prove that I wasn't a liability to the team. After a couple of hours and ten reboots of my headset, I finally managed to connect it: a tricky bug had been preventing the headset from showing the confirmation dialog for the ADB connection. At last, I was able to join the development. During the briefing, I suggested that I could develop the logic for the planes to appear randomly from different spots on the room walls, which aligned with our project plan.

Currently, on Meta Quest devices, you can scan your room and mark walls, tables, and other objects in it, and your applications can be aware of all of that geometry and use it in their game or application logic. For example, you can place a chessboard only on a table or show a window to the outside world only on a wall. To be honest, the SDK is not very mature. If you choose the latest version of Meta's Unity packages during a hackathon, the documentation you have access to is already outdated, and the only source of truth is the C# source of the sample scenes and their attached scripts. Another problem I faced was that my headset refused to recognize the scanned room, so I could not access any wall meshes during real-life testing. I had to borrow a headset from a teammate to test my logic, and in the end I was able to make planes appear from the walls.
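To illustrate the idea of spawning planes at random spots on the walls, here is a minimal, engine-independent sketch in Python. It is not the Unity C# code from the hackathon and does not use Meta's SDK: the `Wall` type, its fields, and both function names are hypothetical, and each wall is assumed to be a flat rectangle described by its center and two unit vectors lying in its plane.

```python
import random
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass(frozen=True)
class Wall:
    """Hypothetical flat rectangular wall: a center plus two in-plane unit axes."""
    center: Vec3
    right: Vec3    # unit vector along the wall's width
    up: Vec3       # unit vector along the wall's height
    width: float
    height: float


def random_point_on_wall(wall: Wall, rng: random.Random) -> Vec3:
    """Pick a uniformly distributed point inside the wall rectangle."""
    u = rng.uniform(-0.5, 0.5) * wall.width    # offset along the width
    v = rng.uniform(-0.5, 0.5) * wall.height   # offset along the height
    return tuple(
        c + u * r + v * w
        for c, r, w in zip(wall.center, wall.right, wall.up)
    )


def random_spawn(walls: list[Wall], rng: random.Random) -> tuple[Wall, Vec3]:
    """Choose a wall (weighted by area) and a random spawn point on it."""
    areas = [w.width * w.height for w in walls]
    wall = rng.choices(walls, weights=areas, k=1)[0]
    return wall, random_point_on_wall(wall, rng)
```

Weighting the choice by area is one possible design decision: it keeps the spawn density roughly even across the room instead of crowding the smaller walls.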

I had a draft project that I developed after taking a course from Unity, but I was still curious about what it is like to develop a game in a more realistic environment. So, during the hackathon, I paid attention to the nuances of game development in a team. As I expected, because you construct scenes mostly from Unity Game Objects in the GUI, one of the hardest parts of keeping the project under version control is avoiding merge conflicts in a VCS like Git. I also learnt how important it is to develop the logic of your Game Objects in as much isolation as possible, using interfaces and events, to minimize those conflicts. So each active participant in the team was responsible for a different part of the game and had their own duplicate of the main scene. We all developed our code in our own scenes and shared our results as prepared Prefabs and scripts. Prefabs can be compared with classes in OOP. You can have multiple instances of the same Prefab in a scene, and all of them will have the same logic, which is very useful for game development. For example, you can have a Prefab of a plane and instantiate it in the scene with different parameters such as color and speed.
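The two ideas above, decoupling through events and Prefabs as class-like templates, can be sketched outside of Unity in a few lines of Python. This is only an analogy under my own assumptions, not the hackathon code: `Event`, `Plane`, and `ScoreBoard` are hypothetical names, and the hand-rolled `Event` class merely stands in for a C# event.

```python
from dataclasses import dataclass, field
from typing import Callable


class Event:
    """A minimal publish/subscribe event, similar in spirit to a C# event."""

    def __init__(self) -> None:
        self._handlers: list[Callable[..., None]] = []

    def subscribe(self, handler: Callable[..., None]) -> None:
        self._handlers.append(handler)

    def emit(self, *args) -> None:
        # Iterate over a copy so handlers may subscribe during emission.
        for handler in list(self._handlers):
            handler(*args)


@dataclass
class Plane:
    """Plays the role of a Prefab: one template, many configured instances."""
    color: str
    speed: float
    landed: Event = field(default_factory=Event)

    def land(self) -> None:
        # The plane only announces what happened; it knows nothing about scoring.
        self.landed.emit(self)


class ScoreBoard:
    """Written independently of Plane; it only reacts to the event."""

    def __init__(self) -> None:
        self.score = 0

    def on_plane_landed(self, plane: Plane) -> None:
        self.score += 1


board = ScoreBoard()
planes = [Plane(color="red", speed=1.0), Plane(color="blue", speed=2.5)]
for plane in planes:
    plane.landed.subscribe(board.on_plane_landed)
    plane.land()
print(board.score)  # prints 2
```

Because `Plane` and `ScoreBoard` only meet through the event, two people can work on them in separate files (or, in Unity, separate scenes and scripts) and wire them together at the end.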

As I mentioned, we also had a sound designer in the team. He was responsible for the sound effects and music of the game, worked in parallel with us, and delivered everything in time. He sat next to me with a synthesizer and, during the hackathon, crafted our own soundtrack, which was brilliant in my opinion: it was calm and contrasted nicely with the gameplay. I was impressed by his work and realized that sound design is a very important part of game development. Towards the end of development, he produced good sound effects for the game, and we were able to integrate them thanks, again, to the flexibility of Unity and its GUI. Also, because Meta Quest and its SDK have good support for spatial sound, we were able to make the sound effects come from the direction of the plane, which was very cool.

My teammates developed the logic for marking the tracks of the planes, which was the most important part of the game. They used the same approach of developing the logic in isolation, and the result worked well. I was impressed by their work and realized that I still have a lot to learn about Unity and C# development. I also developed the logic for plane crashes and worked a little as the 3D designer of our towers and runways. One of the organizers of the event was the creator of Sloyd, a platform for generative 3D models, so I was able to provide our team with dynamic tower designs generated by third-party AI. Closer to the end of the hackathon, I also implemented the game scoring logic.
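I won't reproduce our crash logic here, but one simple way to approach it can be sketched in Python. The assumptions are mine, not taken from the project: every plane is treated as a sphere of the same radius, two planes crash when their centers are closer than two radii, and `find_collisions` is a hypothetical helper name.

```python
import math
from itertools import combinations

Vec3 = tuple[float, float, float]


def find_collisions(positions: dict[str, Vec3], radius: float) -> list[tuple[str, str]]:
    """Return pairs of plane ids whose bounding spheres overlap."""
    hits = []
    # Check every unordered pair once; fine for a handful of planes.
    for (id_a, pos_a), (id_b, pos_b) in combinations(positions.items(), 2):
        if math.dist(pos_a, pos_b) < 2 * radius:
            hits.append((id_a, id_b))
    return hits


positions = {
    "a": (0.0, 0.0, 0.0),
    "b": (0.1, 0.0, 0.0),
    "c": (5.0, 0.0, 0.0),
}
print(find_collisions(positions, radius=0.1))  # prints [('a', 'b')]
```

In an engine like Unity you would normally rely on its built-in colliders and collision callbacks instead of a manual pairwise check; the sketch only shows the underlying idea.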

By the way, a neighbouring team actually decided to go with WebXR and built an application that shows a virtual gallery of images on the walls of your room. You walk up to a frame and tell the headset microphone what you want to see inside it, and AI generates an image inside the frame. It’s cool that, despite my scepticism, WebXR can be used for prototyping during a hackathon.

We managed to get the game into a state good enough to present, but unfortunately we didn't win any prizes. Still, I was happy to have participated: I learnt a lot and definitely had a lot of fun. So, if you think you are not skilled or experienced enough to take part in a hackathon, I can say that it's not true. You can find a team, have a lot of fun, and gain experience. You can also make new connections, which can be useful for your career in the future.
